Application Resilience with Multi-Cluster API Gateway Failover Routing

Architecting highly available and resilient applications means that teams must design for failure, accepting that outages (planned or unplanned) can occur anywhere in the hardware, software infrastructure, and service layers. Teams can then weigh different implementation approaches that help them maintain their desired SLAs and minimize service disruption. One approach is to scale services across different clusters (possibly across sites and regions) and configure cross-cluster routing policies that take effect when a failure occurs.

Recently we announced Gloo Federation (Gloo-Fed) to streamline configuration and traffic management for multi-cluster Gloo API Gateway environments. Gloo Federation provides a single pane of glass to view all services across clusters and clouds, centrally configure Gloo instances individually or in groups, and connect the Gloo instances to provide failover capabilities and location-based routing. In this blog we take a deep dive into how failover routing works and point you to a tutorial so you can try it yourself.

Failover Routing in Gloo Federation

Gloo Federation works as a typical Kubernetes-native application, built on Custom Resources and Controllers, with no additional infrastructure or custom configuration formats. Once the Gloo Federation Controller is installed, the desired clusters are registered to its control plane for management. From that point, Gloo-Fed can not only see all the existing services and configurations on those clusters, but also define global configurations and policies at the multi-cluster level and push them down to the individual control planes.

An example is the Failover Scheme policy, which is a Gloo Federation configuration object for administrators to specify a series of fallback traffic destinations for a given upstream whenever it enters an unhealthy state.

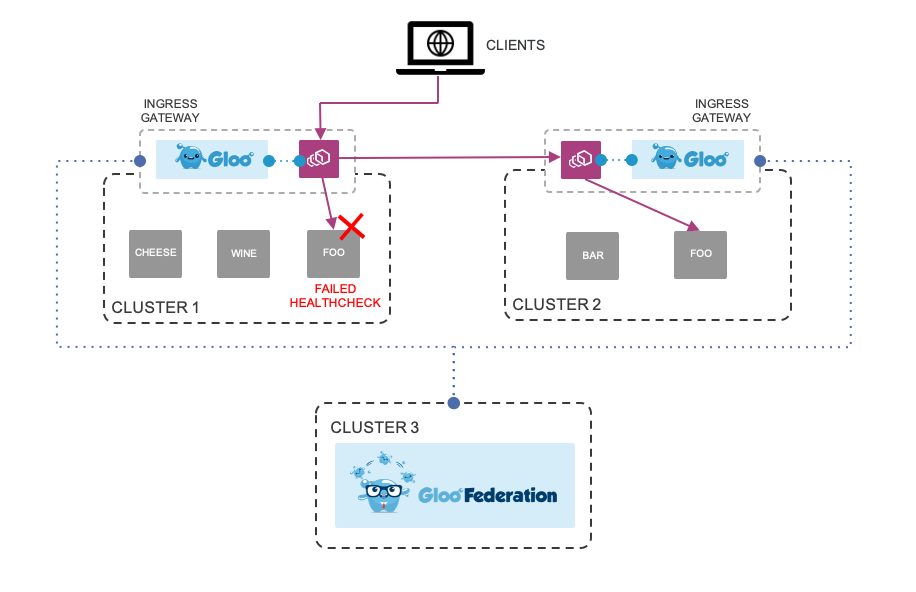

The diagram illustrates, at a high level, how this works with the Gloo Federation control plane installed, two clusters registered, and services deployed to the clusters:

- Global failover routing is configured in Gloo Federation and pushed down to each Gloo Gateway on Clusters 1 and 2

- A client sends a request to Foo on Cluster 1

- Meanwhile, health checks fail for Foo on Cluster 1

- The Cluster 1 Gloo control plane routes the traffic to the Foo service behind Gloo on Cluster 2

Security is enabled through mTLS between the separate clusters, which encrypts the traffic that crosses from one to the other. Two commands are used to generate an upstream and downstream CA certificate and key. Once generated, they are placed into the clusters as secrets so that Gloo can access them. Because the clusters are physically separate, and may even sit in separate data centers or clouds, securing the communication between them is essential to minimizing attack vectors.

Health Checks are a critical part of the failover process: they are how Envoy determines whether the primary endpoint is in a good state. They can be set up with just a few lines of configuration.
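To make the idea concrete, here is a minimal Python sketch of threshold-based health tracking, loosely modeled on the healthy and unhealthy thresholds that Envoy health checks expose. It is a simplified illustration, not Gloo or Envoy code: the `HealthTracker` class and its field names are hypothetical, and real health checking has more moving parts (timeouts, intervals, and so on).

```python
from dataclasses import dataclass


@dataclass
class HealthTracker:
    """Simplified, hypothetical health state for a single endpoint."""

    unhealthy_threshold: int = 3  # consecutive failed checks before marking unhealthy
    healthy_threshold: int = 2    # consecutive passed checks before marking healthy
    healthy: bool = True
    _streak: int = 0              # consecutive results that disagree with the current state

    def record(self, check_passed: bool) -> bool:
        """Record one health check result and return the current health state."""
        if check_passed == self.healthy:
            # The result agrees with the current state, so any streak is broken.
            self._streak = 0
            return self.healthy

        self._streak += 1
        needed = self.healthy_threshold if check_passed else self.unhealthy_threshold
        if self._streak >= needed:
            # Enough consecutive contrary results: flip the state.
            self.healthy = check_passed
            self._streak = 0
        return self.healthy
```

With thresholds like these, a single failed check does not trigger failover on its own; the endpoint is only treated as unhealthy after several failures in a row, and it is only restored after several successes in a row.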

Prioritized Routing Order (Failover Scheme) allows any number of upstreams, from any Gloo instance, to be listed in priority order as failover targets in case the original service is unhealthy.
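The prioritized order can be pictured with a short Python sketch. This is only a conceptual model under our own assumptions, not the Gloo Federation implementation: the `select_targets` function, the `(cluster, upstream)` tuples, and the health map are all hypothetical, and the real load-balancing behavior is more nuanced than "first healthy tier wins".

```python
from typing import Dict, List, Tuple

# Hypothetical representation of an upstream: (cluster name, upstream name).
Upstream = Tuple[str, str]


def select_targets(
    primary: Upstream,
    failover_groups: List[List[Upstream]],
    is_healthy: Dict[Upstream, bool],
) -> List[Upstream]:
    """Return the healthy upstreams in the highest-priority tier that has any.

    The primary is tried first, then each failover group in the order given.
    Upstreams missing from the health map are treated as unhealthy.
    """
    tiers = [[primary]] + failover_groups
    for tier in tiers:
        healthy = [u for u in tier if is_healthy.get(u, False)]
        if healthy:
            return healthy
    return []  # nothing healthy anywhere


primary = ("cluster-1", "foo")
groups = [
    [("cluster-2", "foo")],
    [("cluster-3", "foo"), ("cluster-3", "foo-canary")],
]

# Primary is down, so traffic falls through to the first failover group.
health = {("cluster-1", "foo"): False, ("cluster-2", "foo"): True}
print(select_targets(primary, groups, health))
```

In this example the request for Foo on Cluster 1 would be served by Foo on Cluster 2, matching the flow described earlier. If Cluster 2 were also unhealthy, the next group in the list would be considered.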

Learn More

Try out the tutorial with a sample application to see how multi-cluster failover works for handling ingress traffic with Gloo Federation. If you have questions or need help, hop into the #gloo-enterprise channel in our Slack. We look forward to having you try it out and to hearing your feedback.

- About Gloo open source and enterprise editions. Gloo Federation is available for Gloo Enterprise environments.

- Request a trial of Gloo Enterprise with Federation

- Register here for the upcoming webinar on Aug 13th