The MCP Authorization spec, as of the June 2025 update, poses serious challenges for enterprise adoption. In this blog, we highlight some of its foundational challenges, review alternative proposals from the community, and share our opinion on what authorization should look like in enterprise environments.

Recap: MCP Authorization Specification

The initial versions of the MCP Authorization spec treated the MCP server as both a resource server and an authorization server that issues tokens to MCP clients. This has a number of problems for enterprises, the biggest of which is that enterprises don’t treat backend systems as authorization servers. Enterprises already have authorization servers (identity providers) and treat backend systems, APIs, data, etc. as resources. To gain any traction, the spec would have to relax this requirement.

In June, the MCP community revised its specification to more closely align with the “resource server” approach. The challenge now is that the revised spec leans heavily on OAuth RFCs that are not widely used, not implemented consistently among established identity providers, and probably have no place in enterprises.

For example, the specification relies on MCP clients using anonymous dynamic client registration (DCR) to register with an MCP server in order to get access. The thinking is that an MCP server won’t know ahead of time which clients wish to connect, so the spec allows clients to dynamically discover the server, register, and proceed with a flow that can grant access (following the OAuth authorization code flow). The whole premise is at odds with enterprise usage: enterprises don’t allow anonymous DCR, they want to know exactly who or what is connecting to their MCP servers, and they don’t use authorization code flows internally, especially anonymous ones.

In many ways, the current MCP authorization specification is written for public SaaS style MCP servers. That is, anyone on the internet may want to connect to an MCP server (Atlassian, GitHub, etc) using some kind of public client (Claude, VS Code, etc). The challenges around identity providers not supporting some of the newer OAuth RFCs notwithstanding, this specification creates a non-starter situation for enterprises.

Alternative Proposals

The mismatch between the MCP Authorization specification and enterprise adoption is not lost on the participating members of the MCP community (or those trying to consume it!). For example, Aaron Parecki (co-author of the OAuth 2.1 spec) correctly points out that enterprises use SSO to manage access to their internal applications. They may also federate this SSO with external vendors (Slack, GitHub, etc.). Company employees log in to their systems (OIDC/SAML) with their enterprise credentials, and this should be no different for applications that leverage agents. He proposes a sensible way to connect these identities across domains using token exchange and JWT bearer assertions.
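To make the two building blocks concrete, here is a minimal sketch of the form bodies such a flow might send. The grant type and token type URNs come from RFC 8693 (token exchange) and RFC 7523 (JWT bearer assertions); the function names, the choice of `requested_token_type`, and the example audience are our own assumptions, and the proposal itself may use different parameters.

```python
from urllib.parse import urlencode, parse_qs

# URNs defined by RFC 8693 (token exchange) and RFC 7523 (JWT bearer grant).
TOKEN_EXCHANGE_GRANT = "urn:ietf:params:oauth:grant-type:token-exchange"
JWT_BEARER_GRANT = "urn:ietf:params:oauth:grant-type:jwt-bearer"
ID_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:id_token"
JWT_TOKEN_TYPE = "urn:ietf:params:oauth:token-type:jwt"


def build_token_exchange_body(id_token: str, audience: str) -> str:
    """Step 1 (sent to the enterprise IdP): trade the user's SSO ID token
    for a signed assertion aimed at another domain."""
    return urlencode({
        "grant_type": TOKEN_EXCHANGE_GRANT,
        "subject_token": id_token,
        "subject_token_type": ID_TOKEN_TYPE,
        "requested_token_type": JWT_TOKEN_TYPE,  # assumption for this sketch
        "audience": audience,
    })


def build_jwt_bearer_body(assertion: str) -> str:
    """Step 2 (sent to the other domain's authorization server): present
    the assertion and ask for an access token."""
    return urlencode({
        "grant_type": JWT_BEARER_GRANT,
        "assertion": assertion,
    })
```

Both bodies would be POSTed as `application/x-www-form-urlencoded` to the respective token endpoints. Note that no browser redirect or user consent screen is involved, which is the point of the approach.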

In the SEP-1299 enhancement proposal “Server-Side Authorization Management with Client Session Binding”, Dick Hardt (co-author of the OAuth 2.1 spec) points out that the current spec “represents a novel usage of OAuth that differs significantly from traditional patterns”. Traditional usage, he notes, has verified OAuth clients (vetted, used by trusted developers, registered in the Authorization Server, etc.) and doesn’t rely on dynamic client registration. He proposes using HTTP Message Signatures to establish client sessions and demonstrate proof of possession. Functionality that needs user authorization or identity assertion would rely on an “interaction request” mechanism that the MCP server can use to ask the user to log in or consent. This way, all OAuth/SSO can be handled on the MCP server side, which keeps the clients simple.

Our Recommendation for Securing MCP Services

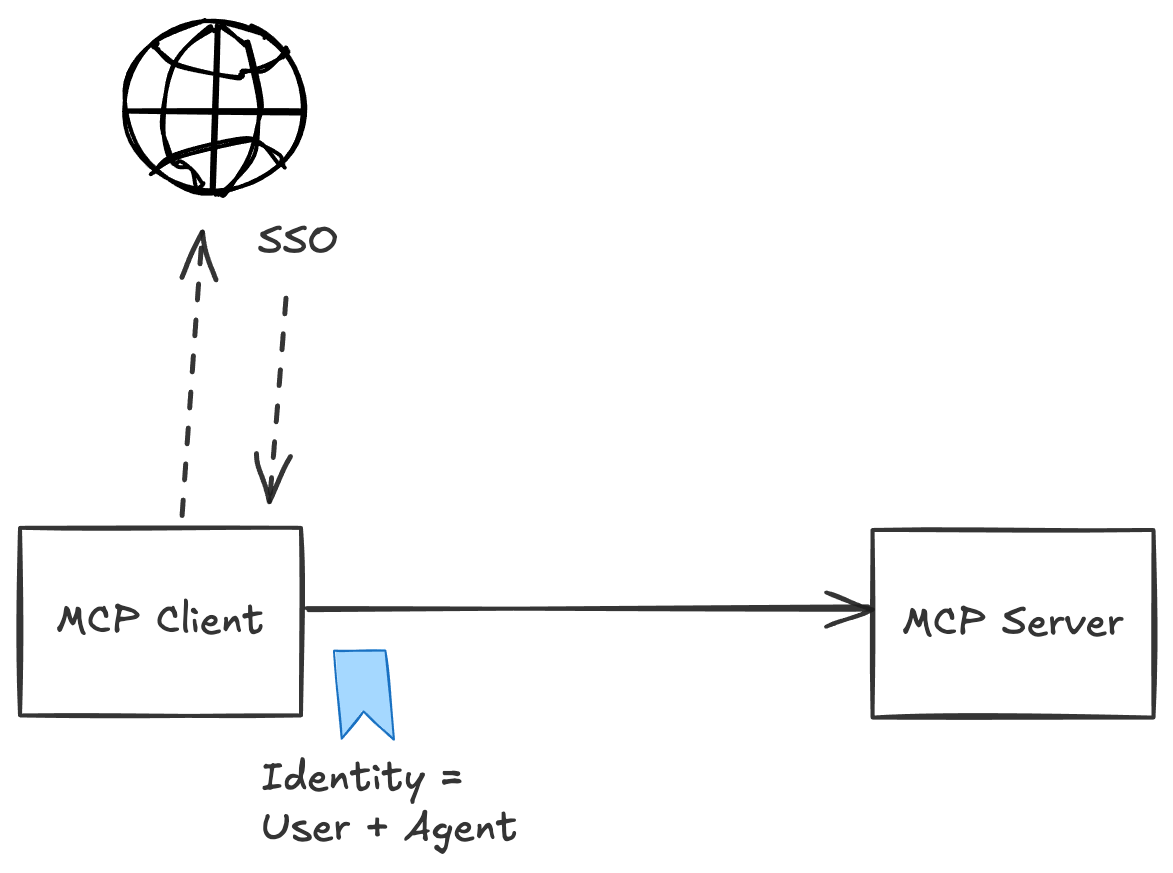

Inside an enterprise, things look very different. There is no “user grants access to the enterprise” step. The enterprise already authenticates its users through Single Sign On (SSO, OIDC, SAML, etc) and already knows exactly who they are. From there, the focus shifts to enforcing policy: who can access which systems, data, APIs, or MCP servers.

For an MCP service, that means authorization decisions should be made in the enterprise’s context. The MCP server needs to know: who is the user (if there is one)? What application/host/agent/service are they calling from? What caused the current call? And from there, it can make decisions according to enterprise policy. To do this, we need to be thinking “identity first”: both for the user and the calling agent/application.

Identity First Authorization

Instead of OAuth delegation flows, enterprises should leverage their existing SSO infrastructure. When an MCP client makes a request, the MCP server should receive the user’s identity context. This is usually a JWT (or SAML assertion which can be exchanged for a JWT) issued by an enterprise identity provider (MS Entra, Okta, etc). This token contains the user’s identity, group memberships, roles, and other claims already established in the enterprise.

The MCP server can then enrich this identity context with additional claims as needed (perhaps querying additional enterprise systems for department information, checking other tools for group assignments/permissions, etc). This enriched identity becomes part of the foundation for authorization. The other part is the workload/Agent/MCP client identity.
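As a rough illustration of this step, the sketch below builds an identity context from already-validated token claims and enriches it from another system. It assumes signature, issuer, and audience validation has happened upstream (in a JWT library or a gateway); `IdentityContext`, `DEPARTMENT_DIRECTORY`, the `groups` claim name, and the sample user are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class IdentityContext:
    """User identity as seen by the MCP server."""
    subject: str
    groups: set[str] = field(default_factory=set)
    attributes: dict[str, str] = field(default_factory=dict)


# Hypothetical stand-in for an HR system or directory lookup.
DEPARTMENT_DIRECTORY = {"alice@example.com": "finance"}


def identity_from_claims(claims: dict) -> IdentityContext:
    """Map claims from a validated IdP token onto the identity context.
    The 'groups' claim name is an assumption; IdPs differ here."""
    return IdentityContext(
        subject=claims["sub"],
        groups=set(claims.get("groups", [])),
    )


def enrich(identity: IdentityContext) -> IdentityContext:
    """Add attributes the IdP token does not carry, such as department."""
    department = DEPARTMENT_DIRECTORY.get(identity.subject)
    if department is not None:
        identity.attributes["department"] = department
    return identity
```

A call such as `enrich(identity_from_claims(claims))` would then produce the user half of the authorization input.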

AI agents that call out to MCP servers should have their own cryptographically verifiable identity. These identities (SPIFFE, for example, is a good foundation for agent identity) can be used to construct durable, flexible policies about which systems are allowed to communicate. For example: “the tax agent can call the calculate tax MCP server, but the reservations agent cannot, no matter who the user is”.
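The tax agent example can be sketched as a simple allow-list keyed by agent identity. The SPIFFE IDs, the trust domain, and the MCP server names below are hypothetical, and the sketch assumes the agent's identity has already been verified (for instance from its SVID) before the check runs.

```python
# Hypothetical mapping of verified agent identities to the MCP servers
# each agent is allowed to call.
ALLOWED_CALLS: dict[str, set[str]] = {
    "spiffe://example.org/agent/tax": {"calculate-tax"},
    "spiffe://example.org/agent/reservations": {"book-travel"},
}


def agent_may_call(agent_spiffe_id: str, mcp_server: str) -> bool:
    """Decide on agent identity alone; the user is deliberately not an input.
    Unknown agents get an empty allow-list, so they are denied."""
    return mcp_server in ALLOWED_CALLS.get(agent_spiffe_id, set())
```

Because the user never appears in the function signature, the "no matter who the user is" part of the policy holds by construction.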

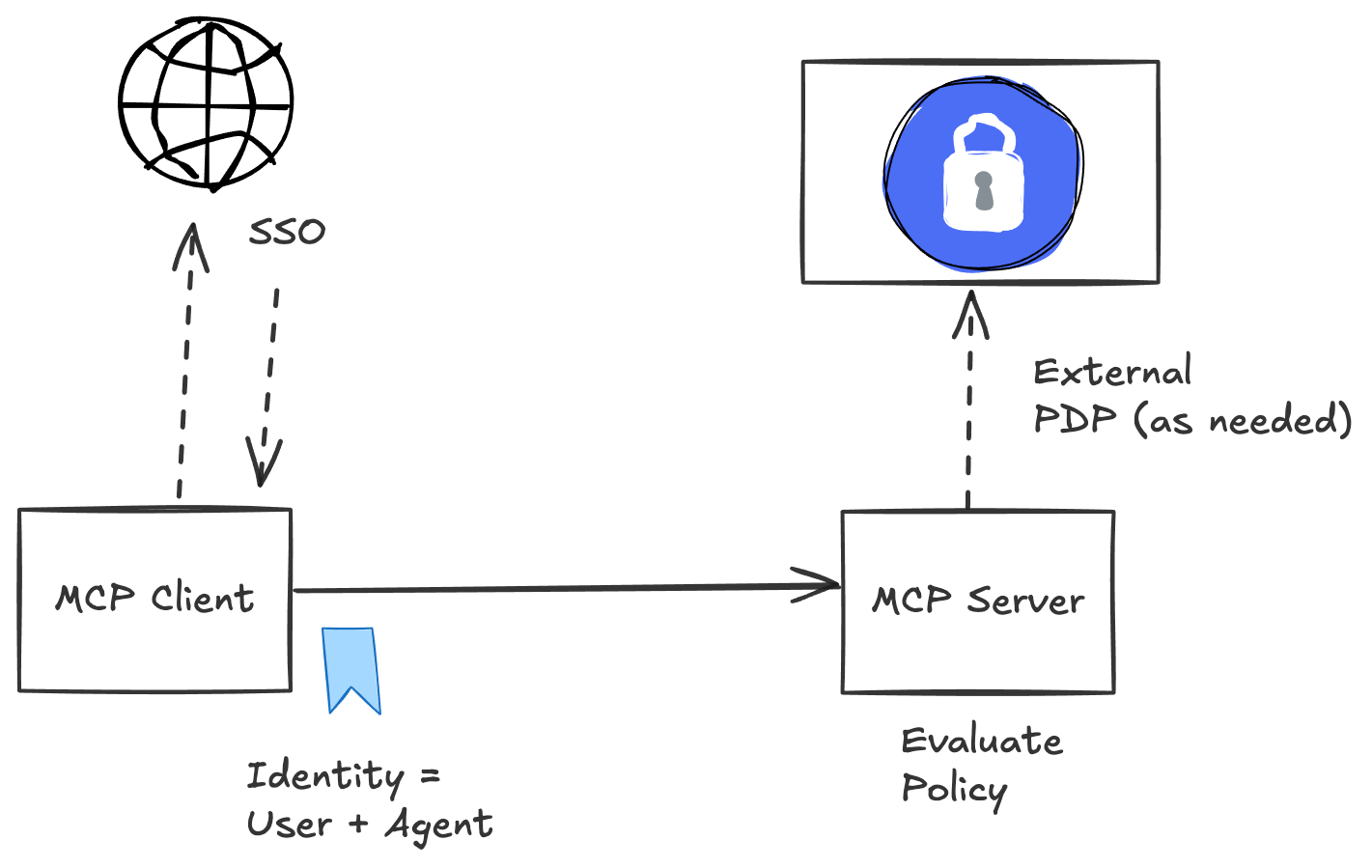

Policy Driven Access Control

Many enterprises have found that relying exclusively on claims in bearer tokens for permission verification is too limiting and not responsive enough to real-time policy changes. It’s a common security objective to move these checks into centralized IAM/authorization systems and to decouple access from code and application logic. These policies can consider multiple factors, such as the user’s identity, the agent’s identity, the resource being accessed (i.e., MCP server, tools, etc.) in specific domains (customer data, financial, development/IT), the context of the request (time of day, origin of the caller, device, etc.), and others.

Policy engines like OPA or cloud-specific solutions can evaluate these consistently across all MCP servers. More importantly, these policy engines allow enterprises to quickly update policies and change things without having to wait for MCP servers or developers to make code changes. This has always been a major factor for modernizing workloads and is especially important in agentic architectures.
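To show the idea of policy held as data rather than application code, here is a small in-process sketch. It is not OPA or Rego; the rule names, the `Request` fields, and the business-hours window are all assumptions made for illustration. In a real deployment the rules would live in the policy engine and the MCP server or gateway would only ask for a decision.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Request:
    """Everything the policy looks at for one MCP call."""
    user_groups: frozenset[str]
    agent_id: str
    mcp_server: str
    tool: str
    hour_utc: int


Rule = Callable[[Request], bool]

# Named rules; each returns True when the request satisfies it.
# Editing this table changes behavior without touching any MCP server.
POLICY: dict[str, Rule] = {
    "finance-needs-finance-group": lambda r: (
        r.mcp_server != "finance" or "finance" in r.user_groups
    ),
    "finance-business-hours-only": lambda r: (
        r.mcp_server != "finance" or 8 <= r.hour_utc < 18
    ),
}


def evaluate(request: Request) -> tuple[bool, list[str]]:
    """Allow only if every rule passes; also return the names of failed rules
    so the decision can be audited."""
    failed = [name for name, rule in POLICY.items() if not rule(request)]
    return (not failed, failed)
```

Returning the failed rule names alongside the decision gives the audit trail something concrete to record for each denial.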

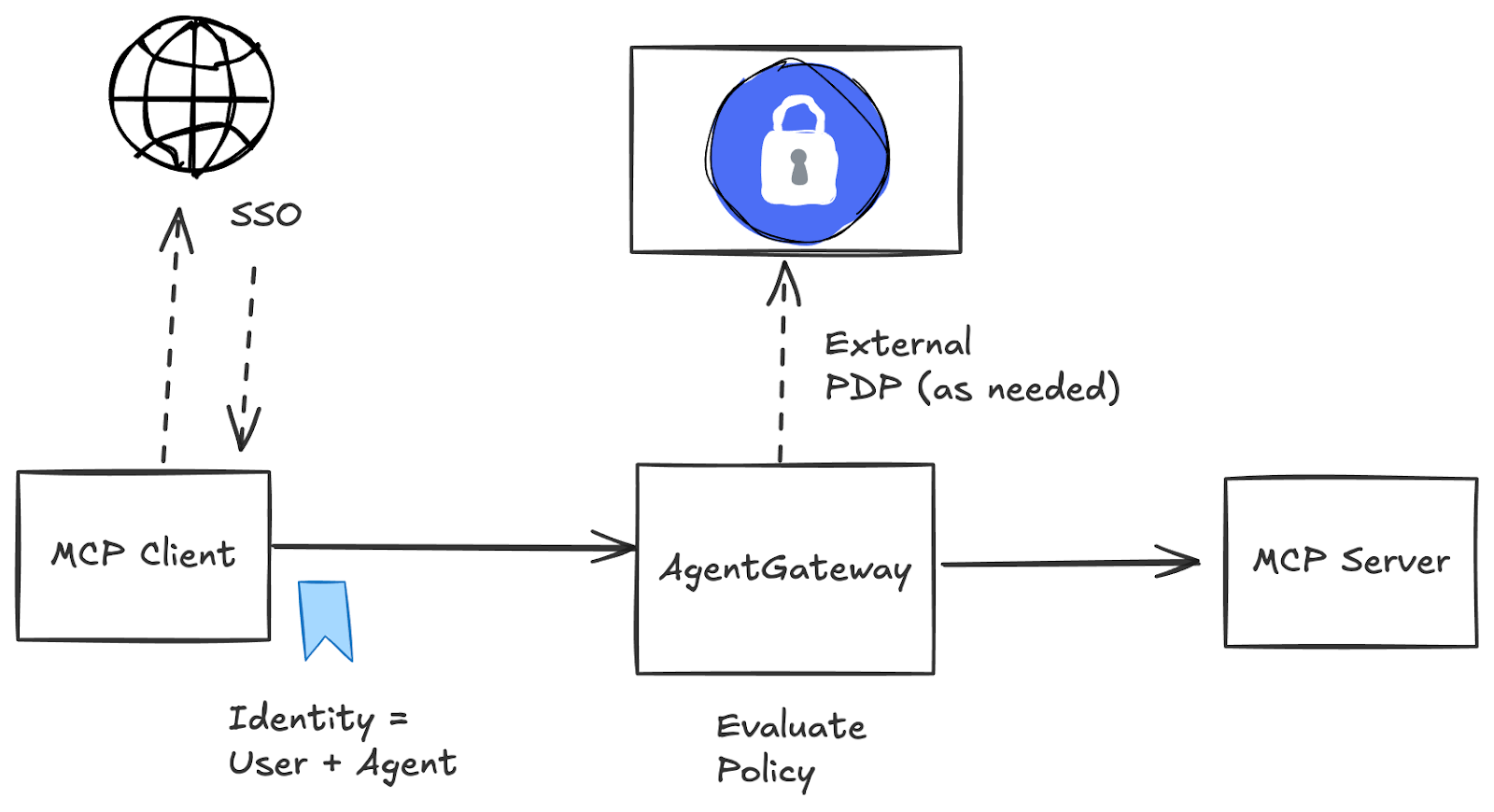

Offload Heavy Lifting to MCP Gateways

A lot of the heavy lifting to implement this approach can be offloaded to smart MCP gateways. For example, agentgateway, an open-source MCP gateway, can handle many common auth patterns such as:

- Validating SSO tokens

- Enriching tokens / token exchange with additional claims

- Evaluating in-line access policies

- Calling out to external policy engines

- Logging/auditing/tracing of all MCP interactions

MCP servers will still likely need context from the authorization decisions performed by the gateway, and can use claims/tokens passed through after initial validation.
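The order of operations in such a gateway can be sketched generically as follows. This is not agentgateway's API or configuration; `handle_mcp_call`, the pluggable `validate` and `authorize` callables, and the in-memory `AUDIT_LOG` are hypothetical names used only to show the flow of validate, authorize, audit, and pass claims through.

```python
from typing import Callable, Optional

# In-memory stand-in for an audit sink; a real gateway would ship these
# records to a logging or tracing backend.
AUDIT_LOG: list[dict] = []


def handle_mcp_call(
    token: str,
    tool: str,
    validate: Callable[[str], Optional[dict]],
    authorize: Callable[[dict, str], bool],
) -> dict:
    """Validate the SSO token, check policy, record the outcome, and return
    the decision along with the claims to pass through to the MCP server."""
    claims = validate(token)  # returns None when the token is not valid
    if claims is None:
        decision = {"status": 401, "reason": "invalid token"}
    elif not authorize(claims, tool):
        decision = {"status": 403, "reason": "denied by policy"}
    else:
        decision = {"status": 200, "forwarded_claims": claims}

    # Every call is audited, whether it was allowed or not.
    AUDIT_LOG.append({
        "tool": tool,
        "sub": (claims or {}).get("sub"),
        "status": decision["status"],
    })
    return decision
```

Keeping `validate` and `authorize` as injected callables mirrors the split described above: token validation can be done in-line, while the policy check can call out to an external engine.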

Benefits of this Approach

We know the MCP community is hard at work on revising the specification to eventually meet enterprise needs. We feel future updates will align better with our recommendations here.

We believe these recommendations align with enterprise practices and expectations, avoid the operational overhead of OAuth flows, and can be implemented right now. This approach mirrors existing auth efforts for things like APIs, microservices, and cloud native workloads, and integrates better with existing enterprise identity providers. It allows dynamic policy evaluation based on rich context, provides clear audit trails linking user actions to business context, and reduces complexity for MCP server developers.
